Today, many marketing decisions and plans rely on behavioral data: direct reporting of consumers' activities through point-of-sale systems, set-top boxes, and other sources. But sometimes there is simply no substitute for asking people what they think, feel, want, and need. Without understanding the "why" behind behavior and brand choices, marketers struggle to draw up sound strategies or devise smarter products and messages.

But the business of asking people questions has changed radically in recent years. Survey-taking bots and click farms have flooded the market with fraudulent responses. At the same time, inattentive respondents deliver answers that may be partially correct but still drive inconsistencies and poor data quality.

The deeper that research professionals dig into these murky issues, the more they see a need for action – because the status quo has been slowly slipping into risky territory.

A radically altered survey environment

The truth is that there are no agreed-upon quality metrics for suppliers and buyers to consult. Cost pressures too frequently turn data quality into a low-priority consideration. Additionally, sample providers are increasingly turning to shared sample pools, as well as non-empaneled participants, to find much-needed respondents. Amid this scramble, transparency is often lacking, making it difficult to understand panelist motivation.

As a cautious buyer of third-party sample, GfK has always worked to manage data quality; for example, we established a tier system to rank sample providers based on internal and supplier-side quality metrics. In the process, we defined rules under which sample sources were (and were not) allowed to participate in our surveys.

But the survey sample environment has deteriorated dramatically in just the past 12 to 18 months. While the tier system alone served us well in the past, in 2021 we saw incidents of fraud and problems with inattentive respondents spike substantially. At the same time, sample supply was suppressed by the economic fallout from the pandemic, with demand outpacing supply for the first time.

Mindful of both quality concerns and sample feasibility issues, GfK decided to take an in-depth look at what is really happening in the sample provider space. How significant are the data quality issues among key respondent sources? Are long-standing assumptions about different sample sources still legitimate? And are there new ways to assess and manage respondent providers to create an optimal mix using realistic yet flexible standards?

No supplier dominates in sample quality

In December 2021, GfK North America launched a survey to research sample sources from three major industry providers, including empaneled and affiliate respondents. Our 15-minute survey explored a variety of topics, using questions from federal government surveys and our own MRI-Simmons Survey of the American Consumer as benchmarks. We conducted two primary analyses:

- Comparing quality across sample providers, using both internal and external quality tools

- Determining the effectiveness of these quality tools at minimizing bias as measured against accepted benchmarks

GfK's study found that, from a variety of perspectives, none of the three suppliers dominated on the quality metrics. GfK further found:

- comparable levels of overstatement on most benchmarked profile points.

- surprising similarities between empaneled and affiliate sources.

- affiliate sources rivaling, and even besting, some empaneled sources.

- quality-check failures over-indexing among key demographics, such as men (especially those aged 30 to 34), Hispanics, and people from low-income households, across sample sources.

A crucial conversation for the industry

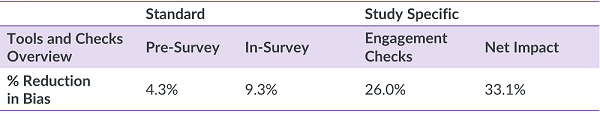

Based on its findings, GfK has devised a new, multi-pronged approach to online data quality, one that delivers an average 33% reduction in bias and gives us the confidence to broaden our sample pool. Each of our tools (internal and external) identifies different types of poor-quality data and delivers an incremental reduction in bias. Because there is limited overlap between them, each tool plays an important part in the equation. GfK now employs these tools as a mandatory part of its methodology, ensuring a systematic, repeatable approach to data quality.

Even with all these efforts, problem respondents can slip through the cracks – a fact of life in today’s research world. Staying a step ahead of sample quality shifts is a continuing challenge for the insights industry. But the more that sample suppliers and buyers work together to address the problem head-on, the more comfortable marketers will be when basing decisions on these crucial insights.

There will always be a need to get inside consumers' thoughts, opinions, perceptions, and feelings, and making that process as seamless and reliable as possible should be a top priority for the marketplace as a whole. GfK plans to do its part by continuing to track quality metrics and by collaborating closely with sample providers over time.

Stacy Baker is Vice President Operations, Americas at GfK. Over her 20+ year tenure, Stacy has held senior roles across the organization. As head of Operations for the Americas, she leads an international team that successfully delivers high-quality data to our clients every day. Contact Stacy at Stacy.Baker@gfk.com.