(Re-post from the “Eye Tracking the User Experience” blog. Aga Bojko (VP, User Experience) is currently writing Eye Tracking the User Experience: A Practical Guide, to be published by Rosenfeld Media in 2013.)

“Participant-free eye tracking” has been around for a while but is still attracting quite a bit of attention. Websites such as EyeQuant, Feng-GUI, and Attention Wizard allow you to upload an image (e.g., a screenshot of a web page) and obtain a visualization (e.g., a heatmap) showing a computer-generated prediction of where people would look in the first five seconds of being exposed to the image. No eye tracker, participants, or lab required!

These companies claim a 75–90% correlation with real eye tracking data. Unfortunately, I couldn’t find any research supporting their claims. If you know of any, I’m all ears.

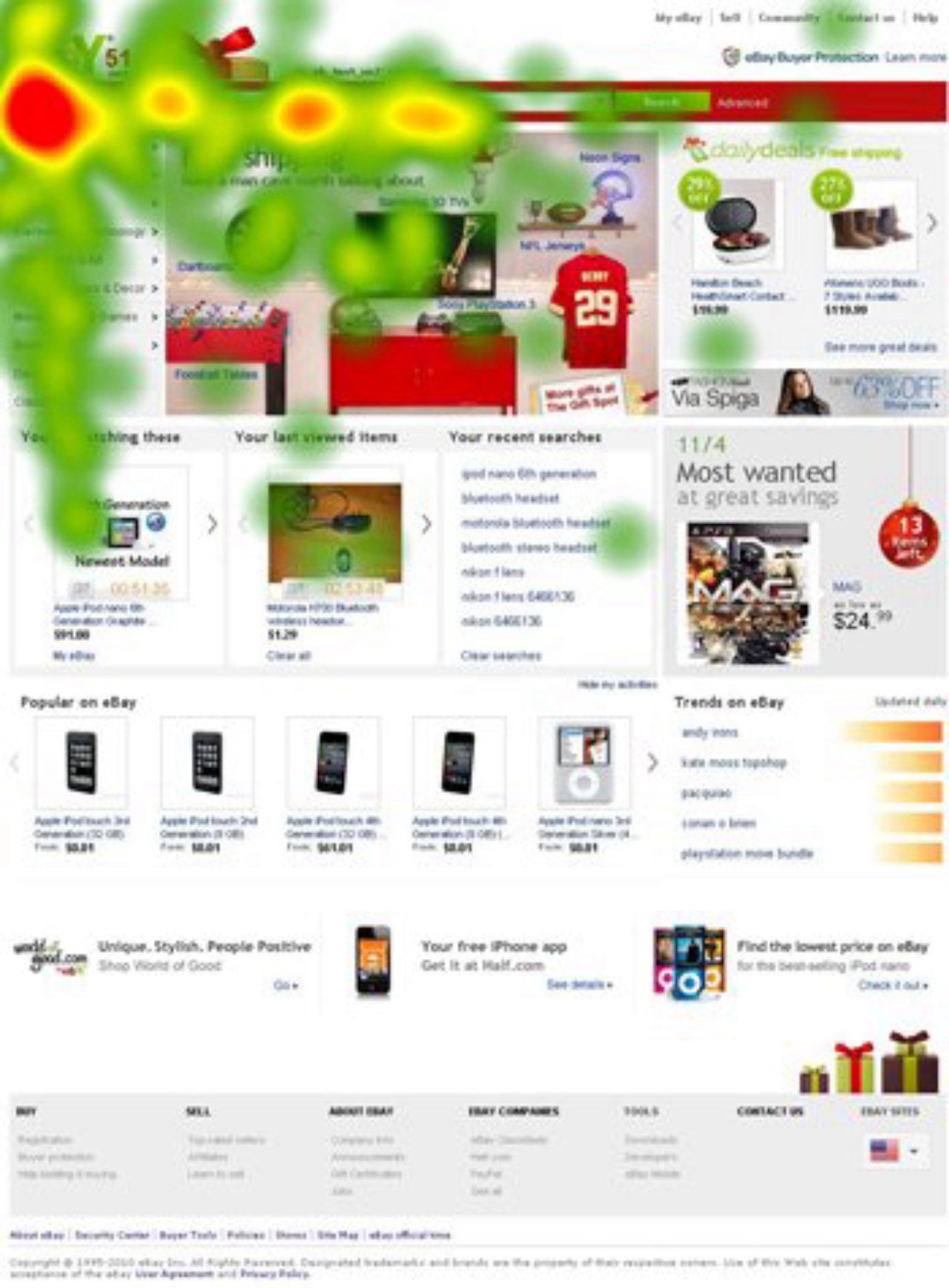

To satisfy my curiosity about the accuracy of their predictions, I submitted the eBay homepage to EyeQuant, Feng-GUI, and Attention Wizard and obtained the following heatmaps:

Attention heatmap simulation by Feng-GUI

Attention heatmap simulation by Attention Wizard

Attention heatmap simulation by EyeQuant

I then compared these heatmaps to the first five seconds of gaze activity from a study with 21 participants tracked with a Tobii T60 eye tracker:

Attention heatmap based on real participants (red = 10+ fixations)

First, let me just say that I’m not a fan of comparing heatmaps just by looking at them because visual inspection is subjective and prone to error. Also, different settings can produce very different visualizations, and you can’t ensure equivalent settings between a real heatmap and a simulated one.

With that in mind, let’s take a look at the four heatmaps. The three simulations look rather similar, don’t they? But the “real heatmap” seems to differ from the simulated ones quite a bit. For example, the simulations predict a lot of attention on images (including advertising), whereas the study participants barely even looked at many of those elements. Our participants primarily focused on the navigation and search, which is not reflected in the simulated heatmaps.

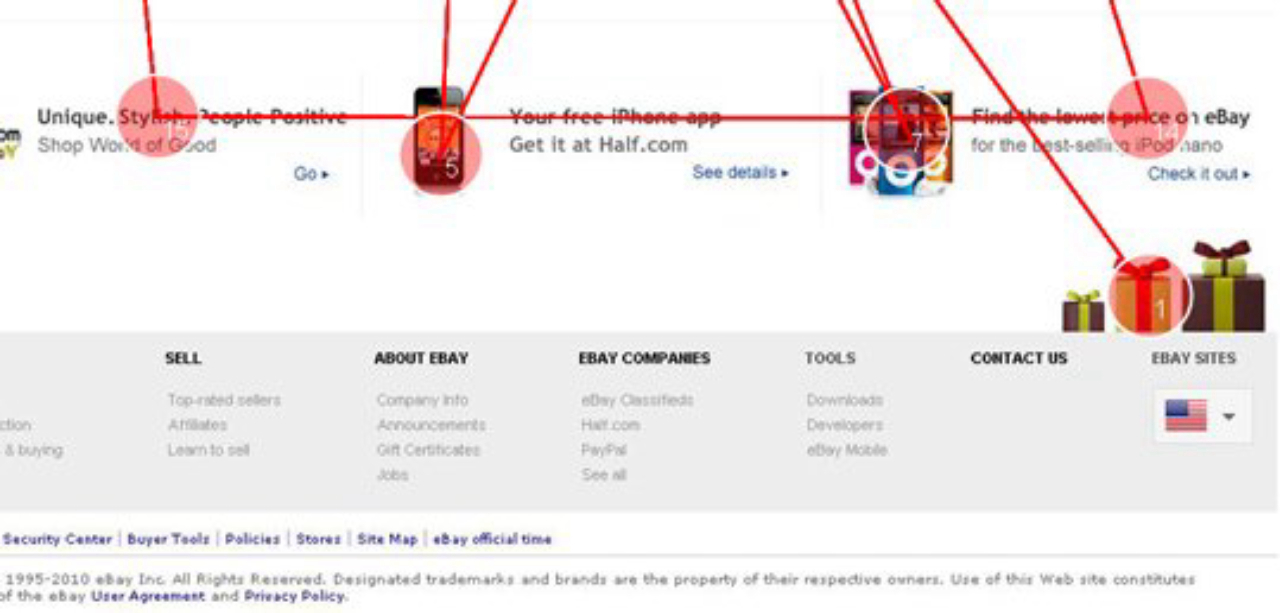

The simulations also show a fair amount of attention on areas below the page fold, but the study participants never even scrolled! In addition to a heatmap, Feng-GUI produced a gaze plot indicating the order in which users would scan the areas of the page. The first element to be looked at was predicted to be a small image at the bottom of the page, well below the fold:

Gaze plot simulation by Feng-GUI (the numbers in the circles indicate the order of fixations)

I wish we could compare the simulated gaze activity to the real gaze activity quantitatively, but that doesn’t appear to be possible. Even though Feng-GUI and EyeQuant provide some data (the percentage of attention on an area of interest) in addition to the visualizations, it’s unclear what measure these percentages are based on:

Percentage of attention on the navigation predicted by EyeQuant
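For illustration only, here is what one common quantitative comparison could look like if both attention maps were available as raw numbers: the Pearson correlation coefficient between a predicted map and an observed map of the same size. This is a minimal sketch, assuming both heatmaps have been exported as equal-sized grids of per-cell values (for example, fixation counts per cell for the real data). None of the tools above is confirmed to offer such an export, the function name `attention_correlation` is hypothetical, and we don’t know how the vendors computed their 75–90% figures.

```python
import math


def attention_correlation(predicted, observed):
    """Pearson correlation between two equal-sized 2-D attention maps.

    Hypothetical helper: each map is a list of rows of numbers, e.g.
    predicted attention or observed fixation counts per grid cell.
    Returns a value in [-1, 1].
    """
    # Require identical grid shapes so cells line up one-to-one.
    if len(predicted) != len(observed) or any(
        len(a) != len(b) for a, b in zip(predicted, observed)
    ):
        raise ValueError("maps must have the same shape")

    # Flatten the grids so each cell becomes one paired observation.
    p = [v for row in predicted for v in row]
    o = [v for row in observed for v in row]
    n = len(p)
    mean_p = sum(p) / n
    mean_o = sum(o) / n

    # Covariance and variances; the common 1/n factors cancel out.
    cov = sum((a - mean_p) * (b - mean_o) for a, b in zip(p, o))
    var_p = sum((a - mean_p) ** 2 for a in p)
    var_o = sum((b - mean_o) ** 2 for b in o)
    if var_p == 0 or var_o == 0:
        raise ValueError("a constant map has no defined correlation")
    return cov / math.sqrt(var_p * var_o)


# Usage with made-up 2x3 grids (not data from this study).
simulated = [[1, 2, 3], [4, 5, 6]]
real = [[6, 5, 4], [3, 2, 1]]
print(attention_correlation(simulated, real))
```

Note that a single coefficient like this summarizes overall overlap; it would not, on its own, reveal specific problems such as predicted attention below a fold that participants never scrolled past.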

But even just eyeballing the results, I know I wouldn’t be comfortable basing decisions on the computer-generated predictions.

The simulations have limited applicability and can by no means replace real eye tracking. They make predictions mostly based on the bottom-up (stimulus-driven) mechanisms that affect our attention, failing to take into account top-down (knowledge-driven) processes, which play a huge role even during the first few seconds.

Computer-generated visualizations of human attention may work better for pages that require no scrolling, and under the assumption that users will be completely unfamiliar with the website and have no task or goal in mind when visiting it. How common is this scenario? Not nearly as common as the sellers of participant-free eye tracking would like us to believe.
